In linux distros running systemd, like Ubuntu 15.04, adding -H tcp://0.0.0.0:2375 to /etc/default/docker does not have the effect it used to.

Note: Docker over TLS should run on TCP port 2376.

Warning: When you use certificate authentication, you don’t need to run the docker client with sudo or be a member of the docker group. That means anyone with the keys can give any instructions to your Docker daemon, giving them root access to the machine hosting the daemon.

Configure Docker settings: after Docker is installed on your machine, modify the settings described below. You'll use the Docker Remote API, which requires the use of port 2375. This port will only be used internally by ArcGIS Notebook Server and should be protected from external use.

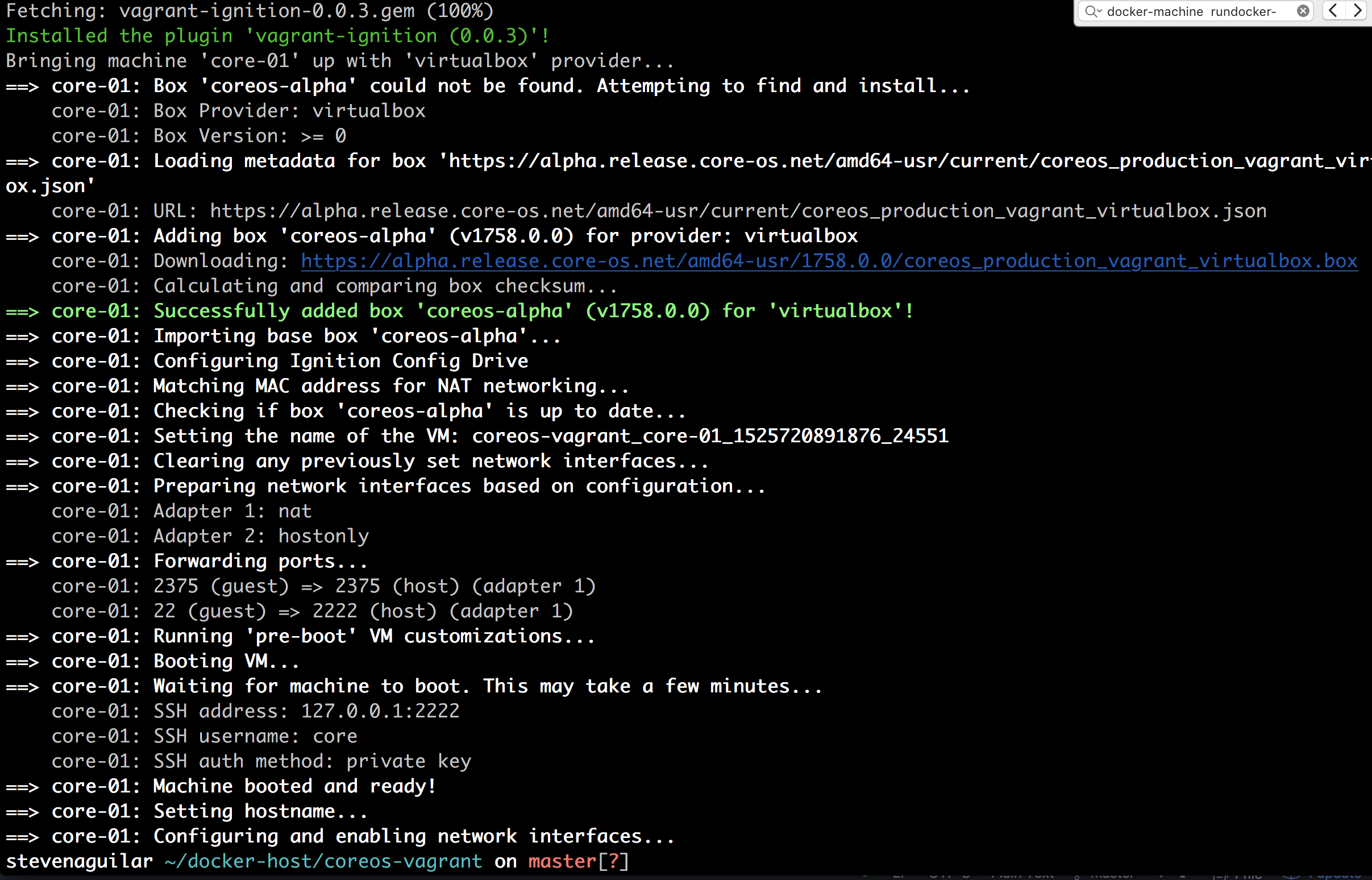

Instead, create a file called /etc/systemd/system/docker-tcp.socket to make docker available on a TCP socket on port 2375:
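A minimal sketch of that socket unit, modeled on the CoreOS documentation cited as the source for these steps. The wrapper function `write_docker_tcp_socket` and its optional path argument are assumptions added for illustration, and the directives may need adjusting for your distribution:

```shell
# Write a systemd socket unit that exposes Docker on TCP port 2375.
# Assumption: the unit body follows the CoreOS example cited as the source;
# the function name and the optional path argument are illustrative only.
write_docker_tcp_socket() {
  unit_path="${1:-/etc/systemd/system/docker-tcp.socket}"
  cat > "$unit_path" <<'EOF'
[Unit]
Description=Docker Socket for the API

[Socket]
ListenStream=2375
BindIPv6Only=both
Service=docker.service

[Install]
WantedBy=sockets.target
EOF
}
```

Calling `write_docker_tcp_socket` as root with no argument writes to the default path under /etc/systemd/system; passing a path lets you inspect the file somewhere harmless first.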

Then enable this new socket:

    systemctl enable docker-tcp.socket
    systemctl enable docker.socket
    systemctl stop docker
    systemctl start docker-tcp.socket
    systemctl start docker

Test that it's working:

    docker -H tcp://127.0.0.1:2375 ps

Source: https://coreos.com/docs/launching-containers/building/customizing-docker/

The Docker command line presents a seamless way of working with containers, and it’s easy to forget that the command line doesn’t really do anything itself--it just sends instructions to the API running on the Docker Engine. Separating the command line from the Engine has two major benefits--other tools can consume the Docker API, so the command line isn’t the only way to manage containers, and you can configure your local command line to work with a remote machine running Docker. It’s amazingly powerful that you can switch from running containers on your laptop to managing a cluster with dozens of nodes, using all the same Docker commands you’re used to, without leaving your desk.

Remote access is how you administer test environments or debug issues in production, and it’s also how you enable the continuous deployment part of your CI/CD pipeline. After the continuous integration stages of the pipeline have completed successfully, you’ll have a potentially releasable version of your app stored in a Docker registry. Continuous deployment is the next stage of the pipeline--connecting to a remote Docker Engine and deploying the new version of the app. That stage could be a test environment that goes on to run a suite of integration tests, and then the final stage could connect to the production cluster and deploy the app to the live environment. In this chapter you’ll learn how to expose the Docker API and keep it protected, and how to connect to remote Docker Engines from your machine and from a CI/CD pipeline.

15.1 Endpoint options for the Docker API

When you install Docker you don’t need to configure the command line to talk to the API--the default setup is for the Engine to listen on a local channel, and for the command line to use that same channel. The local channel uses either Linux sockets or Windows named pipes, and those are both network technologies that restrict traffic to the local machine. If you want to enable remote access to your Docker Engine, you need to explicitly set it in the configuration. There are a few different options for setting up the channel for remote access, but the simplest is to allow plain, unsecured HTTP access.

Enabling unencrypted HTTP access is a horribly bad idea. It sets your Docker API to listen on a normal HTTP endpoint, and anyone with access to your network can connect to your Docker Engine and manage containers--without any authentication. You might think that isn’t too bad on your dev laptop, but it opens up a nice, easy attack vector. A malicious website could craft a request to http://localhost:2375, where your Docker API is listening, and start up a bitcoin mining container on your machine--you wouldn’t know until you wondered where all your CPU had gone.

I’ll walk you through enabling plain HTTP access, but only if you promise not to do it again after this exercise. At the end of this section you’ll have a good understanding of how remote access works, so you can disable the HTTP option and move on to more secure choices.

Try it now Remote access is an Engine configuration option. You can set it easily in Docker Desktop on Windows 10 or Mac by opening Settings from the whale menu and selecting Expose Daemon on tcp://localhost:2375 Without TLS. Figure 15.1 shows that option--once you save the setting, Docker will restart.

Figure 15.1 Enabling plain HTTP access to the Docker API--you should try and forget you saw this.

If you’re using Docker Engine on Linux or Windows Server, you’ll need to edit the config file instead. You’ll find it at /etc/docker/daemon.json on Linux, or on Windows at C:\ProgramData\docker\config\daemon.json. The field you need to add is hosts, which takes a list of endpoints to listen on. Listing 15.1 shows the settings you need for unsecured HTTP access, using Docker’s conventional port, 2375.

Listing 15.1 Configuring plain HTTP access to the Docker Engine via daemon.json

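    {
      "hosts": [
        # enable remote access on port 2375:
        "tcp://0.0.0.0:2375",
        # and keep listening on the local channel - Windows pipe:
        "npipe://"
        # OR Linux socket:
        # "fd://"
      ],
      "insecure-registries": [
        "registry.local:5000"
      ]
    }

The lines starting with # are annotations for this listing. JSON has no comment syntax, so leave them out of the real file and keep only the local channel entry that matches your platform.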

You can check that the Engine is configured for remote access by sending HTTP requests to the API, and by providing a TCP host address in the Docker CLI.

Try it now The Docker command line can connect to a remote machine using the host argument. The remote machine could be the localhost, but via TCP rather than the local channel:

    # connect to the local Engine over TCP:
    docker --host tcp://localhost:2375 container ls

    # and using the REST API over HTTP:
    curl http://localhost:2375/containers/json

The Docker and Docker Compose command lines both support a host parameter, which specifies the address of the Docker Engine where you want to send commands. If the Engine is configured to listen on the local address without security, the host parameter is all you need; there’s no authentication for users and no encryption of network traffic. You can see my output in figure 15.2--I can list containers using the Docker CLI or the API.

Figure 15.2 When the Docker Engine is available over HTTP, anyone with the machine address can use it.

Now imagine the horror of the ops team if you told them you wanted to manage a Docker server, so you needed them to enable remote access--and, by the way, that would let anyone do anything with Docker on that machine, with no security and no audit trail. Don’t underestimate how dangerous this is. Linux containers use the same user accounts as the host server, so if you run a container as the Linux admin account, root, you’ve pretty much got admin access to the server. Windows containers work slightly differently, so you don’t get unlimited server access from within a container, but you can still do unpleasant things.

When you’re working with a remote Docker Engine, any commands you send work in the context of that machine. So if you run a container and mount a volume from the local disk, it’s the remote machine’s disk that the container sees. That can trip you up if you want to run a container on the test server that mounts the source code on your local machine. Either the command will fail because the directory you’re mounting doesn’t exist on the server (which will confuse you because you know it does exist on your machine), or worse, that path does exist on the server and you won’t understand why the files inside the container are different from your disk. It also provides a useful shortcut for someone to browse a remote server’s filesystem if they don’t have access to the server but they do have access to the Docker Engine.

Try it now Let’s see why unsecured access to the Docker Engine is so bad. Run a container that mounts the Docker machine’s disk, and you can browse around the host’s filesystem:

    # using Linux containers:
    docker --host tcp://localhost:2375 container run -it -v /:/host-drive diamol/base

    # OR Windows containers:
    docker --host tcp://localhost:2375 container run -it -v C:\:C:\host-drive diamol/base

    # inside the container, browse the filesystem:
    ls
    ls host-drive

You can see my output in figure 15.3--the user who runs the container has complete access to read and write files on the host.

Figure 15.3 Having access to the Docker Engine means you can get access to the host’s filesystem.

In this exercise you’re just connecting to your own machine, so you’re not really bypassing security. But if you find out the name or IP address of the server that runs your containerized payroll system, and that server has unsecured remote access to the Docker Engine--well, you might be able to make a few changes and roll up to work in that new Tesla sooner than you expected. This is why you should never enable unsecured access to the Docker Engine, except as a learning exercise.

Before we go on, let’s get out of the dangerous situation we’ve created and go back to the private local channel for the Docker Engine. Either uncheck the localhost box in the settings for Docker Desktop, or revert the config change you made for the Docker daemon, and then we’ll go on to look at the more secure options for remote access.

15.2 Configuring Docker for secure remote access

Docker supports two other channels for the API to listen on, and both are secure. The first uses Transport Layer Security (TLS)--the same encryption technique based on digital certificates used by HTTPS websites. The Docker API uses mutual TLS, so the server has a certificate to identify itself and encrypt traffic, and the client also has a certificate to identify itself. The second option uses the Secure Shell (SSH) protocol, which is the standard way to connect to Linux servers, but it is also supported in Windows. SSH users can authenticate with username and password or with private keys.

The secure options give you different ways to control who has access to your cluster. Mutual TLS is the most widely used, but it comes with management overhead in generating and rotating the certificates. SSH requires you to have an SSH client on the machine you’re connecting from, but most modern operating systems do, and it gives you an easier way to manage who has access to your machines. Figure 15.4 shows the different channels the Docker API supports.

Figure 15.4 There are secure ways of exposing the Docker API, providing encryption and authentication.

One important thing here--if you want to configure secure remote access to the Docker Engine, you need to have access to the machine running Docker. And you don’t get that with Docker Desktop, because Desktop actually runs Docker in a VM on your machine, and you can’t configure how that VM listens (except with the unsecured HTTP checkbox we’ve just used). Don’t try to follow the next exercises using Docker Desktop --you’ll either get an error telling you that certain settings can’t be adjusted, or, worse, it will let you adjust them and then everything will break and you’ll need to reinstall. For the rest of this section, the exercises use the Play with Docker (PWD) online playground, but if you have a remote machine running Docker (here’s where your Raspberry Pi earns its keep), there are details in the readme file for this chapter’s source code on how to do the same without PWD.

We’ll start by making a remote Docker Engine accessible securely using mutual TLS. For that you need to generate certificate and key file pairs (the key file acts like a password for the certificate)--one for the Docker API and one for the client. Larger organizations will have an internal certificate authority (CA) and a team that owns the certs and can generate them for you. I’ve already done that, generating certs that work with PWD, so you can use those.

Try it now Sign in to Play with Docker at https://labs.play-with-docker.com and create a new node. In that session, run a container that will deploy the certs, and configure the Docker Engine on PWD to use the certs. Then restart Docker:

    # create a directory for the certs:
    mkdir -p /diamol-certs

    # run a container that sets up the certs & config:
    docker container run -v /diamol-certs:/certs -v /etc/docker:/docker diamol/pwd-tls:server

    # kill docker & restart with new config
    pkill dockerd
    dockerd &>/docker.log &

The container you ran mounted two volumes from the PWD node, and it copied the certs and a new daemon.json file from the container image onto the node. If you change the Docker Engine configuration, you need to restart it, which is what the dockerd commands are doing. You can see my output in figure 15.5--at this point the engine is listening on port 2376 (which is the convention for secure TCP access) using TLS.

Figure 15.5 Configuring a Play with Docker session so the engine listens using mutual TLS

There’s one last step before we can actually send traffic from the local machine into the PWD node. Click on the Open Port button and open port 2376. A new tab will open showing an error message. Ignore the message, and copy the URL of that new tab to the clipboard. This is the unique PWD domain for your session. It will be something like ip172-18-0-62-bo9pj8nad2eg008a76e0-2376.direct.labs.play-with-docker.com, and you’ll use it to connect from your local machine to the Docker Engine in PWD. Figure 15.6 shows how you open the port.

Figure 15.6 Opening ports in PWD lets you send external traffic into containers and the Docker Engine.

Your PWD instance is now available to be remotely managed. The certificates you’re using are ones I generated using the OpenSSL tool (running in a container--the Dockerfile is in the images/cert-generator folder if you’re interested in seeing how it works). I’m not going to go into detail on TLS certificates and OpenSSL because that’s a long detour neither of us would enjoy. But it is important to understand the relationship between the CA, the server cert, and the client cert. Figure 15.7 shows that.

Figure 15.7 A quick guide to mutual TLS--server certs and client certs identify the holder and share a CA.

If you’re going to use TLS to secure your Docker Engines, you’ll be generating one CA, one server cert for each Engine you want to secure, and one client cert for each user you want to allow access. Certs are created with a lifespan, so you can make short-lived client certs to give temporary access to a remote Engine. All of that can be automated, but there’s still overhead in managing certificates.
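To make the one-CA, one-server-cert, one-client-cert relationship concrete, here is a rough sketch using the `openssl` command line. It is not the script behind the chapter's cert-generator image. The function name `make_docker_certs`, the CN values, the key size, and the lifetimes are all assumptions chosen for illustration, and it presumes `openssl` is installed:

```shell
# Sketch: create one CA, one server cert, and one short-lived client cert.
# Assumptions: the openssl CLI is installed; output file names mirror the
# ones used in this chapter; CN values and lifetimes are illustrative.
make_docker_certs() (
  out_dir="$1"
  server_host="$2"
  mkdir -p "$out_dir" || exit 1
  cd "$out_dir" || exit 1

  # 1. The certificate authority (CA) that signs every other cert.
  openssl genrsa -out ca-key.pem 2048 || exit 1
  openssl req -new -x509 -sha256 -days 365 -key ca-key.pem \
    -subj "/CN=example-docker-ca" -out ca.pem || exit 1

  # 2. A server cert for one Engine, tied to its DNS name.
  openssl genrsa -out server-key.pem 2048 || exit 1
  openssl req -new -sha256 -key server-key.pem \
    -subj "/CN=$server_host" -out server.csr || exit 1
  printf 'subjectAltName = DNS:%s\nextendedKeyUsage = serverAuth\n' \
    "$server_host" > server-ext.cnf
  openssl x509 -req -sha256 -days 365 -in server.csr \
    -CA ca.pem -CAkey ca-key.pem -CAcreateserial \
    -extfile server-ext.cnf -out server-cert.pem || exit 1

  # 3. A client cert for one user, valid for only 7 days.
  openssl genrsa -out client-key.pem 2048 || exit 1
  openssl req -new -sha256 -key client-key.pem \
    -subj "/CN=example-user" -out client.csr || exit 1
  printf 'extendedKeyUsage = clientAuth\n' > client-ext.cnf
  openssl x509 -req -sha256 -days 7 -in client.csr \
    -CA ca.pem -CAkey ca-key.pem -CAcreateserial \
    -extfile client-ext.cnf -out client-cert.pem || exit 1
)
```

The body runs in a subshell, so the `cd` does not affect your terminal. Issuing another client cert means repeating step 3 with a different CN, and the short `-days` value is what gives you temporary access.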

When you configure the Docker Engine to use TLS, you need to specify the paths to the CA cert, and the server cert and key pair. Listing 15.2 shows the TLS setup that has been deployed on your PWD node.

Listing 15.2 The Docker daemon configuration to enable TLS access

    {
      "hosts": ["unix:///var/run/docker.sock", "tcp://0.0.0.0:2376"],
      "tls": true,
      "tlscacert": "/diamol-certs/ca.pem",
      "tlskey": "/diamol-certs/server-key.pem",
      "tlscert": "/diamol-certs/server-cert.pem"
    }

Now that your remote Docker Engine is secured, you can’t use the REST API with curl or send commands using the Docker CLI unless you provide the CA certificate, client certificate, and client key. The API won’t accept any old client cert either--it needs to have been generated using the same CA as the server. Attempts to use the API without client TLS are rejected by the Engine. You can use a variation of the image you ran on PWD to download the client certs on your local machine, and use those to connect.
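For the REST API, curl can present the same three files. The sketch below assumes a TLS-secured Engine reachable at a host and port you supply (the example host in the comment is hypothetical) and a directory holding `ca.pem`, `client-cert.pem`, and `client-key.pem`. The function name `docker_api_get` is illustrative:

```shell
# Sketch: call the Docker REST API on a TLS-secured Engine with curl.
# Assumptions: the cert directory holds ca.pem, client-cert.pem and
# client-key.pem; the function name docker_api_get is illustrative.
docker_api_get() {
  engine_host="$1"   # for example docker.example.com:2376 (hypothetical)
  cert_dir="$2"      # directory containing the CA and client files
  api_path="$3"      # for example /containers/json
  curl --silent --show-error \
    --cacert "$cert_dir/ca.pem" \
    --cert "$cert_dir/client-cert.pem" \
    --key "$cert_dir/client-key.pem" \
    "https://$engine_host$api_path"
}
```

`--cacert` lets curl verify the server's certificate, while `--cert` and `--key` identify the client, which is the mutual part of mutual TLS.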

Try it now Make sure you have the URL for port 2376 access to PWD--that’s how you’ll connect from your local machine to the PWD session. Use the domain for your session that you copied earlier when you opened port 2376. Try connecting to the PWD engine:

    # grab your PWD domain from the address bar - something like
    # ip172-18-0-62-bo9pj8nad2eg008a76e0-2376.direct.labs.play-with-docker.com

    # store your PWD domain in a variable - on Windows:
    $pwdDomain='<your-pwd-domain-from-the-address-bar>'

    # OR Linux:
    pwdDomain='<your-pwd-domain-goes-here>'

    # try accessing the Docker API directly:
    curl "http://$pwdDomain/containers/json"

    # now try with the command line:
    docker --host "tcp://$pwdDomain" container ls

    # extract the PWD client certs onto your machine:
    mkdir -p /tmp/pwd-certs
    cd ./ch15/exercises
    tar -xvf pwd-client-certs -C /tmp/pwd-certs

    # connect with the client certs:
    docker --host "tcp://$pwdDomain" --tlsverify --tlscacert /tmp/pwd-certs/ca.pem --tlscert /tmp/pwd-certs/client-cert.pem --tlskey /tmp/pwd-certs/client-key.pem container ls

    # you can use any Docker CLI commands:
    docker --host "tcp://$pwdDomain" --tlsverify --tlscacert /tmp/pwd-certs/ca.pem --tlscert /tmp/pwd-certs/client-cert.pem --tlskey /tmp/pwd-certs/client-key.pem container run -d -P diamol/apache

It’s a little cumbersome to pass the TLS parameters to every Docker command, but you can also capture them in environment variables. If you don’t provide the right client cert, you’ll get an error, and when you do provide the certs, you have complete control over your Docker Engine running in PWD from your local machine. You can see that in figure 15.8.
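One way to capture those parameters is with the `DOCKER_HOST`, `DOCKER_TLS_VERIFY`, and `DOCKER_CERT_PATH` variables that the Docker CLI reads. The sketch below is an assumption about how you might wire that up for this exercise; the function name `use_tls_engine` is illustrative. The CLI looks for files named `ca.pem`, `cert.pem`, and `key.pem` in `DOCKER_CERT_PATH`, so the client files are copied to those names first:

```shell
# Sketch: capture the TLS connection details in environment variables.
# DOCKER_HOST, DOCKER_TLS_VERIFY and DOCKER_CERT_PATH are read by the
# Docker CLI; the function name use_tls_engine is illustrative.
use_tls_engine() {
  engine_host="$1"   # your PWD domain
  cert_dir="$2"      # for example /tmp/pwd-certs

  # The CLI expects cert.pem and key.pem, so copy the client files.
  cp "$cert_dir/client-cert.pem" "$cert_dir/cert.pem" || return 1
  cp "$cert_dir/client-key.pem" "$cert_dir/key.pem" || return 1

  export DOCKER_HOST="tcp://$engine_host"
  export DOCKER_TLS_VERIFY=1
  export DOCKER_CERT_PATH="$cert_dir"
}
```

After `use_tls_engine "$pwdDomain" /tmp/pwd-certs`, a plain `docker container ls` in the same terminal goes to the remote Engine. Running `unset DOCKER_HOST DOCKER_TLS_VERIFY DOCKER_CERT_PATH` returns you to the local channel.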

Figure 15.8 You can only work with a TLS-secured Docker Engine if you have the client certs.

The other option for secure remote access is SSH; the advantage here is that the Docker CLI uses the standard SSH client, and there’s no need to make any config changes to the Docker Engine. There are no certificates to create or manage, as authentication is handled by the SSH server. On your Docker machine you need to create a system user for everyone you want to permit remote access; they use those credentials when they run any Docker commands against the remote machine.

Try it now Back in your PWD session, make a note of the IP address for node1, and then click to create another node. Run these commands to manage the Docker Engine that’s on node1 from the command line on node2 using SSH:

    # save the IP address of node1 in a variable:
    node1ip='<node1-ip-address-goes-here>'

    # open an SSH session to verify the connection:
    ssh root@$node1ip
    exit

    # list the local containers on node2:
    docker container ls

    # and list the remote containers on node1:
    docker -H ssh://root@$node1ip container ls

Play with Docker makes this very simple, because it provisions nodes with all they need to connect to each other. In a real environment you’d need to create users, and if you want to avoid typing passwords you’d also need to generate keys and distribute the public key to the server and the private key to the user. You can see from my output in figure 15.9 that this is all done in the Play with Docker session, and it works with no special setup.

Figure 15.9 Play with Docker configures the SSH client between nodes so you can use it with Docker.

Ops people will have mixed feelings about using Docker over SSH. On the one hand, it’s much easier than managing certificates, and if your organization has a lot of Linux admin experience, it’s nothing new. On the other hand, it means giving server access to anyone who needs Docker access, which might be more privilege than they need. If your organization is primarily Windows, you can install the OpenSSH server on Windows and use the same approach, but it’s very different from how admins typically manage Windows server access. TLS might be a better option in spite of the certificate overhead because it’s all handled within Docker and it doesn’t need an SSH server or client.

Securing access to your Docker Engine with TLS or SSH gives you encryption (the traffic between the CLI and the API can’t be read on the network) and authentication (users have to prove their identity in order to connect). The security doesn’t provide authorization or auditing, so you can’t restrict what a user can do, and you don’t have any record of what they did do. That’s something you’ll need to be aware of when you consider who needs access to which environments. Users also need to be careful which environments they use--the Docker CLI makes it super-easy to switch to a remote engine, and it’s a simple mistake to delete volumes containing important test data because you thought you were connected to your laptop.

15.3 Using Docker Contexts to work with remote engines

You can point your local Docker CLI to a remote machine using the host parameter, along with all the TLS cert paths if you’re using a secured channel, but it’s awkward to do that for every command you run. Docker makes it easier to switch between Docker Engines using Contexts. You create a Docker Context using the CLI, specifying all the connection details for the Engine. You can create multiple contexts, and all the connection details for each context are stored on your local machine.

Try it now Create a context to use your remote TLS-enabled Docker Engine running in PWD:

    # create a context using your PWD domain and certs:
    docker context create pwd-tls --docker "host=tcp://$pwdDomain,ca=/tmp/pwd-certs/ca.pem,cert=/tmp/pwd-certs/client-cert.pem,key=/tmp/pwd-certs/client-key.pem"

    # for SSH it would be:
    # docker context create local-tls --docker 'host=ssh://user@server'

    # list contexts:
    docker context ls

You’ll see in your output that there’s a default context that points to your local Engine using the private channel. My output in figure 15.10 is from a Windows machine, so the default channel uses named pipes. You’ll also see that there’s a Kubernetes endpoint option--you can use Docker contexts to store the connection details for Kubernetes clusters too.

Figure 15.10 Adding a new context by specifying the remote host name and the TLS certificate paths

Contexts contain all the information you need to switch between local and remote Docker Engines. This exercise used a TLS-secured engine, but you can run the same command with an SSH-secured engine by replacing the host parameter and cert paths with your SSH connection string.
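As a small illustration of the SSH variant, the sketch below wraps `docker context create` in a helper. The function name `create_ssh_context` and its three arguments are assumptions for illustration. The user must already be able to SSH to the server and run Docker there:

```shell
# Sketch: create a context for an Engine reached over SSH.
# Assumptions: the function name and argument order are illustrative; the
# user must be able to SSH to the server and run Docker there.
create_ssh_context() {
  context_name="$1"
  ssh_user="$2"
  ssh_server="$3"
  docker context create "$context_name" \
    --docker "host=ssh://$ssh_user@$ssh_server"
}
```

There are no cert paths to supply because authentication is left to the SSH client.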

Contexts can connect your local CLI to other machines on your local network or on the public internet. There are two ways to switch contexts--you can do it temporarily for the duration of one terminal session, or you can do it permanently so it works across all terminal sessions until you switch again.

Try it now When you switch contexts, your Docker commands are sent to the selected engine--you don’t need to specify host parameters. You can switch temporarily with an environment variable or permanently with the context use command:

    # switch to a named context with an environment variable - this is the
    # preferred way to switch contexts, because it only lasts for this
    # session

    # on Windows:
    $env:DOCKER_CONTEXT='pwd-tls'

    # OR Linux:
    export DOCKER_CONTEXT='pwd-tls'

    # show the selected context:
    docker context ls

    # list containers on the active context:
    docker container ls

    # switch back to the default context - switching contexts this way is
    # not recommended because it's permanent across sessions:
    docker context use default

    # list containers again:
    docker container ls

The output is probably not what you expect, and you need to be careful with contexts because of these different ways of setting them. Figure 15.11 shows my output, with the context still set to the PWD connection, even though I’ve switched back to the default.

Figure 15.11 There are two ways to switch contexts, and if you mix them you’ll get confused.

The context you set with docker context use becomes the system-wide default. Any new terminal windows you open, or any batch process you have running Docker commands, will use that context. You can override that using the DOCKER_CONTEXT environment variable, which takes precedence over the selected context and only applies to the current terminal session. If you regularly switch between contexts, I find that it’s a good practice to always use the environment variable option and leave the default context as your local Docker Engine. Otherwise it’s easy to start the day by clearing out all your running containers, forgetting that yesterday you set your context to use the production server.
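A narrower option, for when you only need one command against a remote Engine, is the Docker CLI's global `--context` flag. The helper below is a minimal sketch; the function name `on_context` is an assumption for illustration:

```shell
# Sketch: send one command to a named context without changing the default
# context or the DOCKER_CONTEXT variable. The function name is illustrative.
on_context() {
  context_name="$1"
  shift
  docker --context "$context_name" "$@"
}
```

For example, `on_context pwd-tls container ls` lists the remote containers, and the next plain `docker` command still goes wherever your terminal was already pointing.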

Of course, you shouldn’t need to regularly access the production Docker servers. As you get further along your container journey, you’ll take more advantage of the easy automation Docker brings and get to a place where the only users with access to Docker are the uber-admins and the system account for the CI/CD pipeline.

15.4 Adding continuous deployment to your CI pipeline

Now that we have a remote Docker machine with secure access configured, we can write a complete CI/CD pipeline, building on the work we did with Jenkins in chapter 11. That pipeline covered the continuous integration (CI) stages--building and testing the app in containers and pushing the built image to a Docker registry. The continuous deployment (CD) stages add to that, deploying to a testing environment for final signoff and then to production.

The difference between the CI stages and the CD stages is that the CI builds all happen locally using the Docker Engine on the build machine, but the deployment needs to happen with the remote Docker Engines. The pipeline can use the same approach we’ve taken in the exercises, using Docker and Docker Compose commands with a host argument pointing to the remote machine, and providing security credentials. Those credentials need to live somewhere, and it absolutely must not be in source control--the people who need to work with source code are not the same people who need to work with production servers, so the credentials for production shouldn’t be widely available. Most automation servers let you store secrets inside the build server and use them in pipeline jobs, and that separates credential management from source control.

Try it now We’ll spin up a local build infrastructure similar to chapter 11, with a local Git server, Docker registry, and Jenkins server all running in containers. There are scripts that run when this Jenkins container starts to create credentials from the PWD certificate files on your local machine, so the CD stages will deploy to PWD:

    # switch to the folder with the Compose files:
    cd ch15/exercises/infrastructure

    # start the containers - using Windows containers:
    docker-compose -f ./docker-compose.yml -f ./docker-compose-windows.yml up -d

    # OR with Linux containers:
    docker-compose -f ./docker-compose.yml -f ./docker-compose-linux.yml up -d

When the containers are running, browse to Jenkins at http://localhost:8080/credentials and log in with username diamol and password diamol. You’ll see that the certificates for the Docker CA and the client connection are already stored in Jenkins--they were loaded from the PWD certs on your machine, and they’re available to use in jobs. Figure 15.12 shows the certificates loaded as Jenkins credentials.

Figure 15.12 Using Jenkins credentials to provide TLS certs for pipelines to connect to Docker on PWD

This is a fresh build infrastructure running in all-new containers. Jenkins is all configured and ready to go thanks to the automation scripts it uses, but the Git server needs some manual setup. You’ll need to browse to http://localhost:3000 and complete the installation, create a user called diamol, and then create a repository called diamol. If you need a refresher on that, you can flip back to chapter 11--figures 11.3, 11.4, and 11.5 show you what to do.

The pipeline we’ll be running in this section builds a new version of the timecheck app from chapter 12, which just prints the local time every 10 seconds. The scripts are all ready to go in the source code for this chapter, but you need to make a change to the pipeline to add your own PWD domain name. Then when the build runs, it will run the CI stages and deploy from your local container to your PWD session. We’ll pretend PWD is both the user-acceptance test environment and production.

Try it now Open up the pipeline definition file in the folder ch15/exercises--use Jenkinsfile if you’re running Linux containers and Jenkinsfile.windows if you’re using Windows containers. In the environment section there are variables for the Docker registry domain and the User Acceptance Testing (UAT) and production Docker Engines. Replace pwd-domain with your actual PWD domain, and be sure to include the port, :80, after the domain--PWD listens on port 80 externally, and it maps that to port 2376 in the session:

    environment {
      REGISTRY = 'registry.local:5000'
      UAT_ENGINE = 'ip172-18-0-59-bngh3ebjagq000ddjbv0-2376.direct.labs.play-with-docker.com:80'
      PROD_ENGINE = 'ip172-18-0-59-bngh3ebjagq000ddjbv0-2376.direct.labs.play-with-docker.com:80'
    }

Now you can push your changes to your local Git server:

    git remote add ch15 http://localhost:3000/diamol/diamol.git
    git commit -a -m 'Added PWD domains'
    git push ch15

    # Gogs will ask you to login -
    # use the diamol username and password you registered in Gogs

Now browse to Jenkins at http://localhost:8080/job/diamol/ and click Build Now.

This pipeline starts in the same way as the chapter 11 pipeline: fetching the code from Git, building the app with a multi-stage Dockerfile, running the app to test that it starts, and then pushing the image to the local registry. Then come the new deployment stages: first there’s a deployment to the remote UAT engine and then the pipeline stops, waiting for human approval to continue. This is a nice way to get started with CD, because every step is automated, but there’s still a manual quality gate, and that can be reassuring for organizations that aren’t comfortable with automatic deployments to production. You can see in figure 15.13 that the build has passed up to the UAT stage, and now it’s stopped at Await Approval.

Figure 15.13 The CI/CD pipeline in Jenkins has deployed to UAT and is awaiting approval to continue.

Your manual approval stage could involve a whole day of testing with a dedicated team, or it could be a quick sanity check that the new deployment looks good in a production-like environment. When you’re happy with the deployment, you go back to Jenkins and signal your approval. Then it goes on to the final stage--deploying to the production environment.

Try it now Back in your PWD session, check that the timecheck container is running and that it’s writing out the correct logs:

    docker container ls
    docker container logs timecheck-uat_timecheck_1

I’m sure everything will be fine, so back to Jenkins and click the blue box in the Await Approval stage. A window pops up asking for confirmation to deploy--click Do It! The pipeline will continue.

It’s getting exciting now--we’re nearly there with our production deployment. You can see my output in figure 15.14, with the UAT test in the background and the approval stage in the foreground.

Figure 15.14 The UAT deployment has worked correctly and the app is running in PWD. On to production!

The CD stages of the pipeline don’t do anything more complex than the CI stages. There’s a script file for each stage that does the work using a single Docker Compose command, joining together the relevant override files (this could easily be a docker stack deploy command if the remote environment is a Swarm cluster). The deployment scripts expect the TLS certificate paths and Docker host domain to be provided in environment variables, and those variables are set up in the pipeline job.

It’s important to keep that separation between the actual work that’s done with the Docker and Docker Compose CLIs, and the organization of the work done in the pipeline. That reduces your dependency on a particular automation server and makes it easy to switch between them. Listing 15.3 shows part of the Jenkinsfile and the batch script that deploy to UAT.

Listing 15.3 Passing Docker TLS certs to the script file using Jenkins credentials

    // the deployment stage of the Jenkinsfile:
    stage('UAT') {
      steps {
        withCredentials(
          [file(credentialsId: 'docker-ca.pem', variable: 'ca'),
           file(credentialsId: 'docker-cert.pem', variable: 'cert'),
           file(credentialsId: 'docker-key.pem', variable: 'key')]) {
          dir('ch15/exercises') {
            sh 'chmod +x ./ci/04-uat.bat'
            sh './ci/04-uat.bat'
            echo 'Deployed to UAT'
          }
        }
      }
    }

    # and the actual script just uses Docker Compose:
    docker-compose --host tcp://$UAT_ENGINE --tlsverify --tlscacert $ca --tlscert $cert --tlskey $key -p timecheck-uat -f docker-compose.yml -f docker-compose-uat.yml up -d

Jenkins provides the TLS certs for the shell script from its own credentials. You could move this build to GitHub Actions and you’d just need to mimic the workflow using secrets stored in the GitHub repo--the build scripts themselves wouldn’t need to change. The production deployment stage is almost identical to UAT; it just uses a different set of Compose files to specify the environment settings. We’re using the same PWD environment for UAT and production, so when the job completes you’ll be able to see both deployments running.

Try it now: Back to the PWD session for one last time, and you can check that your local Jenkins build has correctly deployed to the UAT and production environments:

    docker container ls
    docker container logs timecheck-prod_timecheck_1

My output is in figure 15.15. We have a successful CI/CD pipeline running from Jenkins in a local container and deploying to two remote Docker environments (which just happen to be the same one in this case).

Figure 15.15 The deployment on PWD. To use a real cluster, I’d just change the domain name and certs.

This is amazingly powerful. It doesn’t take any more than a Docker server to run containers for different environments and a machine running Docker for the CI/CD infrastructure. You can prove this with a pipeline for your own app in a day (assuming you’ve already Dockerized the components), and the path to production just requires spinning up clusters and changing the deployment targets.

Before you plan out your production pipeline, however, there is one other thing to be aware of when you make your Docker Engine available remotely--even if it is secured. That’s the access model for Docker resources.

15.5 Understanding the access model for Docker

This doesn’t really need a whole section because the access model for Docker resources is very simple, but it gets its own section to help it stand out. Securing your Engine is about two things: encrypting the traffic between the CLI and API, and authenticating to ensure the user is allowed access to the API. There’s no authorization--the access model is all or nothing. If you can’t connect to the API, you can’t do anything, and if you can connect to the API, you can do everything.
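To make the all-or-nothing point concrete, here is a small sketch. It assumes the same $UAT_ENGINE, $ca, $cert, and $key variables that the pipeline provides, and it uses a hypothetical wrapper function called remote_docker, which is not something from the book's scripts. The connection flags are the standard Docker CLI ones used in listing 15.3.

```shell
#!/bin/sh
# Hypothetical wrapper (not from the book): runs any docker command against
# the remote Engine, authenticating with the client certificate.
remote_docker() {
  docker --host "tcp://$UAT_ENGINE" --tlsverify \
    --tlscacert "$ca" --tlscert "$cert" --tlskey "$key" "$@"
}

# The same credentials authorize every command. Example calls:
#   remote_docker container ls
#       a harmless read-only query
#   remote_docker container rm --force timecheck-prod_timecheck_1
#       removes the production container, with no extra permission needed
```

Nothing in the wrapper distinguishes the two calls, and nothing on the Engine side does either. Anyone holding the certificate files can run both.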

Whether that frightens you or not depends on your background, your infrastructure, and the maturity of your security model. You might be running internal clusters with no public access, using a separate network for your managers, restricting IP access to that network, and rotating the Docker CA every day. That gives you defense in depth, but there’s still an attack vector from your own employees to consider (yes, I know Stanley and Minerva are great team players, but are you really sure they aren’t crooks? Especially Stanley).

There are alternatives, but they get complicated quickly. Kubernetes has a role-based access control model, as does Docker Enterprise, so you can restrict which users can access resources, and what they can do with those resources. Or there’s a GitOps approach that turns the CI/CD pipeline inside out, using a pull-based model so the cluster is aware when a new build has been approved, and the cluster deploys the update itself. Figure 15.16 shows that--there are no shared credentials here because nothing needs to connect to the cluster.

Figure 15.16 The brave new world of GitOps--everything is stored in Git, and clusters start deployments.

GitOps is a very interesting approach, because it makes everything repeatable and versioned--not just your application source code and the deployment YAML files, but the infrastructure setup scripts too. It gives you the single source of truth for your whole stack in Git, which you can easily audit and roll back. If the idea appeals to you but you’re starting from scratch--well, it will take you a chunk of time to get there, but you can start with the very simple CI/CD pipelines we’ve covered in this chapter and gradually evolve your processes and tools as you gain confidence.

15.6 Lab

If you followed along with the CD exercise in section 15.4, you may have wondered how the deployment worked, because the CI stage pushed the image to your local registry and PWD can’t access that registry. How did it pull the image to run the container? Well, it didn’t. I cheated. The deployment override files use a different image tag, one from Docker Hub that I built and pushed myself (sorry if you feel let down, but all the images from this book are built with Jenkins pipelines, so it’s the same thing really). In this lab you’re going to put that right.

The missing part of the build is in stage 3, which just pushes the image to the local registry. In a typical pipeline there would be a test stage on a local server that could access that image before pushing to the production registry, but we’ll skip that and just add another push to Docker Hub. This is the goal:

- Tag your CI image so it uses your account on Docker Hub and a simple “3.0” tag.

- Push the image to Docker Hub, keeping your Hub credentials secure.

- Use your own Docker Hub image to deploy to the UAT and production environments.

There are a few moving pieces here, but go through the existing pipeline carefully and you’ll see what you need to do. Two hints: First, you can create a username/password credential in Jenkins and make it available in your Jenkinsfile using the withCredentials block. Second, the open port to a PWD session sometimes stops listening, so you may need to start new sessions that will need new PWD domains in the Jenkinsfile.
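If you want a starting point for the script side of the push, here is one possible sketch. It is not the book's solution. It assumes the pipeline exports the Jenkins credential as HUB_USER and HUB_PASSWORD and that LOCAL_IMAGE holds the name of the image built by the CI stages. Those three variable names, the push_to_hub function, and the timecheck repository name are all assumptions made for illustration.

```shell
#!/bin/sh
# Hypothetical push script (not the book's solution).
# Assumes HUB_USER and HUB_PASSWORD come from the pipeline's credentials,
# and LOCAL_IMAGE is the image reference produced by the CI stages.
# Passing the password with --password-stdin keeps it out of the
# process list and the build log.
push_to_hub() {
  target="$HUB_USER/timecheck:3.0"
  docker image tag "$LOCAL_IMAGE" "$target" &&
    printf '%s' "$HUB_PASSWORD" |
      docker login --username "$HUB_USER" --password-stdin &&
    docker image push "$target"
}
```

Each step runs only if the previous one succeeded, so a failed login stops the push. The deployment override files would then need to reference the same Docker Hub image name.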

My solution on GitHub started as a copy of the exercises folder, so if you want to see what I changed, you can compare the files as well as check the approach: https://github.com/sixeyed/diamol/blob/master/ch15/lab/README.md.